I. Introduction

Navigating the Intersection of Natural Intelligence and Artificial Intelligence

In recent years, the rise of artificial intelligence (AI) has sparked transformative shifts across nearly every facet of society. From healthcare and finance to education and social media, AI’s reach continues to expand, shaping how we work, communicate, and even make decisions. AI systems now power recommendation algorithms on our favorite streaming platforms, assist doctors in diagnosing complex diseases, and automate mundane yet essential tasks in workplaces. While the advantages of AI are profound, concerns are growing around how society interacts with and depends on these systems. Increasingly, AI is not just a tool but a daily influence on our behavior, thinking patterns, and collective social values.

However, this growing reliance on AI raises an intriguing paradox: as AI grows more advanced, we may be fostering a form of human cognitive complacency—what some might call “natural stupidity.” In this context, “natural stupidity” refers to our tendency to accept information and decisions uncritically, offloading tasks that would typically require judgment and reasoning onto AI systems. This trend is not a result of human intelligence declining, but rather an increasing propensity to let machines handle functions we used to manage ourselves, risking a degradation in practical intelligence and critical thinking.

The core issue here is not AI itself but how we choose to use it. As AI becomes more integrated into our lives, a vital question arises: Are we using AI as a tool to enhance our intelligence, or are we allowing it to replace our need for it? This is an important distinction. While AI can support humans in achieving more efficient, well-informed outcomes, overdependence on these systems may erode fundamental skills, such as commonsense reasoning, problem-solving, and even creativity.

Therefore, the thesis of this article is straightforward yet crucial: to thrive in an AI-driven world, we must prioritize enhancing our natural intelligence, utilizing AI as a supportive tool rather than a replacement. By maintaining a balanced relationship with AI, we can leverage its potential without surrendering our capacity for independent thought and discernment. This approach emphasizes AI’s role as an enhancer of human ability, encouraging the development of critical thinking and commonsense alongside AI’s advancement. This article will explore the nature of “natural stupidity,” examine AI’s role in it, and outline strategies for using AI to complement, rather than compromise, our human intelligence.

II. Understanding Natural Stupidity

Definition and Implications

As technology continues to advance, the concept of “natural stupidity” becomes increasingly relevant. Though provocative, this term is not a judgment on intelligence but rather a description of human tendencies that lead to poor decision-making. In simple terms, natural stupidity refers to our propensity to make avoidable errors in judgment due to cognitive shortcuts, biases, or lack of critical engagement. Unlike intelligence, which often implies the potential to solve complex problems or adapt to new situations, natural stupidity highlights the opposite—our susceptibility to hasty, impulsive, or uninformed decisions, especially when presented with overwhelming or unfamiliar information.

Natural stupidity is not a fixed trait but rather a behavioral pattern shaped by societal influences, information environments, and, increasingly, digital tools like AI. In decision-making, it manifests as a failure to assess facts critically, relying instead on assumptions, surface-level information, or even groupthink. Unfortunately, as access to information expands, so do opportunities for making such errors. When people rely heavily on automated systems to inform decisions without questioning underlying assumptions, natural stupidity can escalate, eroding commonsense reasoning and reflective thought.

Societal Factors Contributing to Lapses in Commonsense Reasoning

Several societal factors contribute to natural stupidity, particularly in today’s fast-paced, information-heavy environment:

- Information Overload and Misinformation

In the digital age, we are bombarded with vast amounts of data, news, opinions, and advertisements, making it challenging to sift fact from fiction. Cognitive psychologists argue that information overload can impair decision-making by overwhelming our cognitive capacity, leading to oversimplified judgments. As a result, individuals may resort to shortcuts, relying on headlines, sound bites, or even AI-generated summaries without delving into the complexities behind them.

- Echo Chambers and Confirmation Bias

With social media algorithms reinforcing content that aligns with our existing beliefs, individuals are often trapped in echo chambers where diverse perspectives are minimized. This isolation fuels confirmation bias, the tendency to seek out, or interpret, information in ways that support one’s preconceptions. In this setting, the line between critical thinking and blind acceptance of ideas blurs, as individuals are less likely to question or challenge information that aligns with their worldview.

- Convenience Culture and Instant Gratification

The modern preference for speed and convenience has shifted our expectations toward quick answers rather than reflective thought. Digital tools often provide instant responses, from online Q&A databases to personal assistant AIs, discouraging deeper inquiry. The immediacy of these responses cultivates a mindset where the “quick answer” becomes acceptable, leading to potentially uninformed or superficial decisions.

- Dependence on Technology for Daily Problem Solving

As we increasingly rely on technology to manage basic tasks (such as navigation, calculations, or even social planning), we risk losing the mental agility these tasks once required. This dependency reinforces natural stupidity by allowing technology to substitute for cognitive effort. Over time, this reliance can dull critical reasoning skills, as people defer to digital tools even for simple decisions.

Real-World Examples of Natural Stupidity

Examining real-world scenarios can illuminate how natural stupidity influences behavior and decision-making. Below are a few instances that illustrate how this phenomenon manifests:

- Misinformation in Media Consumption

A prominent example is the rapid spread of misinformation. In recent years, misinformation surrounding health crises, political events, and scientific research has shown how natural stupidity can drive people to believe, and share, false or misleading information. The tendency to accept emotionally charged headlines or selectively edited clips without fact-checking illustrates how natural stupidity allows misinformation to thrive.

- Impulsive Financial Decisions

Financial decisions provide another example, as many individuals fall prey to impulsive spending, risky investments, or scams without fully understanding the risks involved. Social media, with its blend of financial influencers and algorithm-driven content, often amplifies “get rich quick” narratives. Many users, in their eagerness for immediate returns, overlook critical due diligence, resulting in financial losses or even debt.

- Overreliance on GPS Navigation

A subtler yet relatable example of natural stupidity is our reliance on GPS technology. Many people now rely entirely on digital navigation systems, to the point that they lose a sense of direction or familiarity with local geography. This overreliance can backfire, leading to situations where individuals are stranded or unable to navigate without digital assistance, highlighting how technology can sometimes weaken rather than strengthen our spatial awareness and problem-solving abilities.

- Acceptance of AI-Generated Information Without Scrutiny

As AI-generated content becomes more prevalent, individuals may accept AI outputs as definitive answers without verifying the accuracy. For instance, a person may make medical or legal decisions based solely on AI-driven health advice or automated legal documents, assuming them to be accurate. This unquestioning acceptance can lead to significant consequences if the AI output contains errors or lacks context, underscoring the risks of excessive trust in AI.

These examples reveal how natural stupidity manifests when individuals rely on convenience, speed, or external sources without fully engaging in the decision-making process. As we continue integrating AI into our daily lives, the importance of cultivating commonsense reasoning becomes clear. Recognizing and mitigating these tendencies can help ensure that we use technology as an aid rather than a replacement for critical thinking.

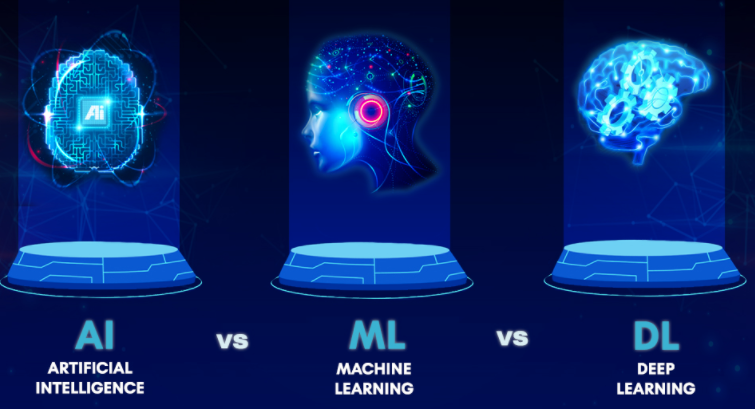

III. The Role of Artificial Intelligence

Current Utilization of AI

Artificial Intelligence has permeated nearly every major industry, transforming how we live, work, and make decisions. Today, AI applications span fields such as healthcare, education, business, and personal life, where they are used to optimize processes, enhance accessibility, and enable new forms of interaction and problem-solving.

- Healthcare

In healthcare, AI systems are used to diagnose diseases, predict patient outcomes, and support clinical decisions. For instance, image recognition algorithms assist radiologists by flagging abnormalities in X-rays and MRIs. AI tools also help predict disease outbreaks, monitor patient vitals in real time, and even suggest treatment plans based on a patient’s medical history and similar cases. These applications can improve patient outcomes by enabling faster, more accurate diagnoses and by reducing the workload on medical professionals.

- Education

In education, AI provides personalized learning experiences, adapting content and pacing to suit individual students’ needs. For example, AI-driven platforms analyze student performance to recommend resources or activities targeting specific learning gaps. This approach can improve outcomes for students, particularly in remote and underserved communities where access to traditional educational resources may be limited. Additionally, AI enables virtual teaching assistants, automated grading systems, and chatbots that help students navigate coursework, providing round-the-clock support.

- Business

AI is also reshaping business practices. From automating routine customer service interactions with chatbots to analyzing consumer behavior and preferences for targeted marketing, AI tools help companies optimize operations and engage more effectively with their customers. In finance, algorithms power trading systems that process vast amounts of data in seconds, acting on trends and signals at a speed and scale no human analyst could match manually. Many businesses also use AI for supply chain optimization, fraud detection, and risk management, enhancing overall efficiency and security.

- Daily Life and Personal Applications

AI is also deeply embedded in our daily routines, often in ways we may not immediately recognize. Virtual assistants like Siri and Alexa provide weather updates, play music, and even control smart home devices. Recommendation algorithms on streaming platforms suggest content based on our past viewing habits, while social media platforms use AI to curate personalized feeds. These applications create a more connected, responsive environment, but also raise questions about privacy, autonomy, and overreliance.

AI’s Influence on Commonsense

While AI’s capacity to assist and automate holds enormous benefits, it also presents unique challenges to commonsense reasoning.

- How AI Assists Commonsense Reasoning

In certain contexts, AI supports commonsense reasoning by offering users access to information and insights they might not readily obtain on their own. For example, in healthcare, AI systems provide clinicians with information on rare diseases or drug interactions that might not immediately come to mind, thereby enhancing decision-making with data-driven insights. In education, AI helps students grasp difficult concepts by recommending tailored content or providing practical examples, which can bolster understanding and reinforce foundational knowledge. In such cases, AI functions as a powerful support tool, offering additional resources to enrich commonsense reasoning.

- How AI Can Hinder Commonsense Reasoning

Conversely, AI can hinder commonsense reasoning when overused or trusted without scrutiny. The primary issue lies in the increasing tendency to defer to AI-generated outputs without questioning their accuracy or relevance. For instance, recommendation algorithms on social media can lead individuals to accept information aligned with their beliefs, reducing their likelihood of critically evaluating opposing viewpoints. When individuals rely on AI to make everyday decisions—such as choosing news sources, entertainment, or even financial investments—they risk disengaging from the reflective thought process that fosters commonsense.

Overreliance on AI can foster intellectual complacency, as people become accustomed to accepting AI-generated information without challenging or investigating its validity. For example, a student who depends entirely on an AI tutor may never learn to work through problems independently. In professional settings, employees who rely on AI for decision-making may become less engaged with the details of their work, potentially overlooking important nuances that AI may miss. This reliance can limit the ability to think critically and adapt to new or unforeseen situations.

- The Paradox of Overreliance on AI

The paradox of AI lies in its capacity to simultaneously empower and diminish human intelligence. As AI becomes more capable, it encourages individuals to offload cognitive tasks, trusting technology to fill in knowledge gaps. However, this dependency can lead to a diminished sense of agency, with individuals losing confidence in their ability to solve problems independently. In effect, as AI grows “smarter,” the average user may become less inclined to exercise commonsense or question outputs critically.

The issue is not the technology itself, but rather the way it is integrated into our lives. In delegating increasingly complex decisions to AI, society risks a passive reliance that undermines personal responsibility and critical thinking. This overreliance can have tangible consequences. For example, an AI-powered GPS may lead a driver to a dead end or dangerous route, highlighting the need for commonsense checks when navigating. Similarly, AI-based hiring tools may screen out qualified candidates if they don’t match a predefined set of criteria, underscoring the importance of human oversight in decision-making.

As we navigate the rise of AI, it is essential to strike a balance that allows us to harness AI’s strengths without compromising our ability to think independently. This balanced approach requires developing a mindset that views AI as an enhancer of human capability, rather than a replacement for commonsense reasoning. By using AI as a complementary tool, we can retain our critical faculties and ensure that technology serves to augment—not diminish—our natural intelligence.

IV. Enhancing Commonsense

As AI technology becomes more integrated into our daily lives, strengthening commonsense and critical thinking skills has never been more crucial. Enhancing commonsense isn’t about resisting technology; rather, it’s about cultivating a balanced, thoughtful approach to using AI. This section outlines strategies for fostering critical thinking, along with educational initiatives that nurture a questioning mindset toward AI and encourage ethical reflection on its use.

Strategies for Development

- Critical Thinking Exercises and Their Importance

Critical thinking involves the ability to analyze information objectively, identify biases, and make reasoned decisions. It’s a vital skill in a world where AI can produce vast amounts of data and recommendations, sometimes without transparency or context. Here are a few exercises that can strengthen critical thinking and improve commonsense reasoning:

- Socratic Questioning: Inspired by the philosopher Socrates, this technique involves questioning assumptions, exploring consequences, and seeking alternative perspectives. Individuals can apply this method when interacting with AI, particularly when presented with a conclusion or recommendation. For example, when AI suggests a particular route on GPS, ask questions like: What’s another possible route? What assumptions did the AI make? Are there factors it might have missed?

- Scenario Analysis: This exercise entails examining hypothetical scenarios to anticipate outcomes and understand potential consequences. For instance, in a business context, employees could analyze a decision-making process where AI might be used to forecast sales or assess risk. Considering alternative scenarios can help users evaluate AI recommendations critically, understanding when it’s appropriate to trust AI’s output and when additional human input may be necessary.

- Evaluating Sources of Information: To counter misinformation, it’s essential to identify credible sources and understand how AI algorithms may prioritize certain content. Encouraging users to assess the origin and reliability of data can help them discern factual information from sensationalism. This is particularly important with social media, where AI-driven content can easily reinforce biases.

- Encouraging a Questioning Mindset Toward AI Information

Embracing a questioning mindset toward AI-generated information is essential for maintaining commonsense reasoning. Instead of passively accepting AI’s outputs, individuals should actively interrogate the “why” and “how” behind AI-generated suggestions.

- Ask “How Did AI Arrive at This Conclusion?”: Most AI systems learn from historical data, and that data can reflect biases, errors, or gaps. By asking questions about AI’s methodology, users can gain a better understanding of its conclusions. For instance, if an AI tool recommends a particular medical treatment, patients and doctors should inquire about the underlying data that informed this recommendation.

- Seek Out Contrasting Views: When using AI for research or information gathering, it’s beneficial to seek out diverse perspectives. This helps counteract the effects of echo chambers and can reveal gaps in AI’s knowledge or assumptions. For example, if an AI-driven news feed heavily favors one viewpoint, actively searching for opposing perspectives can provide a more comprehensive understanding of an issue.

- Understand AI’s Limitations: AI is not omniscient—it makes errors, and these mistakes can sometimes have significant consequences. Encouraging a realistic understanding of AI’s capabilities helps users maintain a healthy level of skepticism. This awareness can prevent overreliance on AI, encouraging users to apply their judgment, particularly in high-stakes situations where commonsense plays a crucial role.

Educational Initiatives

- Integrating Critical Thinking into the Curriculum

Schools and universities play a crucial role in preparing individuals for a future where AI is ubiquitous. By incorporating critical thinking exercises into the curriculum, educators can help students develop the skills needed to navigate an AI-influenced world thoughtfully. Effective critical thinking programs emphasize problem-solving, source evaluation, and reasoning exercises, all of which contribute to strengthening commonsense.

- Debate and Discussion Modules: In structured debate sessions, students are encouraged to argue multiple sides of an issue, a practice that challenges them to consider perspectives outside their comfort zone. Topics such as “Is AI a reliable tool in decision-making?” or “Should we trust AI-driven news more than traditional journalism?” can spark thought-provoking discussions that promote analytical skills.

- Case Studies on AI Ethics: Introducing students to real-world AI ethics dilemmas helps them understand the consequences of overreliance on algorithms. Case studies might include examples of biased hiring algorithms, ethical concerns in AI-driven surveillance, or misinformation spread by social media algorithms. Examining these cases fosters a sense of responsibility and encourages students to critically question AI’s role in society.

- Programs That Integrate Ethics and AI Education

Beyond critical thinking, ethics education is essential in fostering a mindful relationship with AI. Ethical considerations help individuals navigate AI’s capabilities with caution and respect for broader societal implications.

- AI Ethics Workshops and Seminars: Many educational institutions are now offering workshops and seminars on AI ethics, where students and professionals can learn about topics such as privacy, algorithmic bias, and the social implications of AI use. These workshops often feature case studies, guest lectures from AI professionals, and hands-on projects that explore the ethical dimensions of AI. By exposing individuals to ethical frameworks, these programs help build a foundation for responsible AI use.

- Collaborative AI and Ethics Research Projects: Schools can encourage students to collaborate on research projects examining the societal impacts of AI. For instance, students could research how facial recognition technology affects privacy or how AI-driven content recommendation systems influence public opinion. Such projects not only deepen students’ understanding of AI but also encourage a commitment to using technology in ways that benefit society.

- Programs Focused on Developing Digital Literacy

Understanding the basics of how AI works, what data it uses, and the inherent biases within these datasets is crucial for fostering informed and critical AI users. Digital literacy programs that demystify AI technology help individuals make better-informed choices.

- Hands-On AI Labs: In AI-focused labs, students can experiment with building simple machine learning models or creating chatbots. These labs provide an opportunity to understand AI’s potential and limitations, fostering a healthy skepticism of AI’s “black box” nature. By working with AI at a fundamental level, individuals learn to appreciate the human decision-making involved in creating and deploying these systems.

- Media Literacy Courses: Since many AI applications interact with media and news content, media literacy programs can teach students how to recognize and counteract biases in AI-recommended information. This training helps students avoid blindly trusting AI-generated content and emphasizes the importance of diverse and accurate information sources.

By implementing these strategies and educational initiatives, individuals can learn to engage with AI from a place of strength and discernment. Rather than allowing AI to shape their thinking unchallenged, they can use technology as a tool to enhance their understanding, creativity, and commonsense. Cultivating a generation of AI-savvy critical thinkers ensures that our reliance on AI remains measured, informed, and aligned with the broader goals of societal progress.

V. Reducing Natural Stupidity

While “natural stupidity” might sound harsh, it refers to tendencies and cognitive biases that everyone experiences. These biases and habits can lead to poor decision-making, especially when dealing with complex information or new technologies like AI. Reducing these patterns involves identifying the biases that contribute to lapses in commonsense reasoning and actively cultivating techniques to counteract them. By fostering mindfulness, reflection, and healthy debate, individuals can work toward improving their cognitive resilience and making more thoughtful decisions.

Identifying Cognitive Patterns

- Understanding Cognitive Biases that Contribute to Natural Stupidity

Cognitive biases are mental shortcuts that help us make decisions quickly, but they can also lead to errors in judgment. Recognizing these biases is the first step in reducing natural stupidity, as it helps us understand why we may overlook or misinterpret information.

- Confirmation Bias: One of the most pervasive biases, confirmation bias is the tendency to seek out information that supports our existing beliefs while ignoring evidence that contradicts them. In an AI-driven world, this bias can be reinforced by algorithms that tailor content to our preferences, creating echo chambers that prevent us from seeing multiple perspectives. By acknowledging confirmation bias, we can consciously seek out opposing views, which strengthens our understanding and challenges our assumptions.

- Anchoring Bias: Anchoring bias occurs when we place too much emphasis on the first piece of information we receive, even if it’s not the most accurate. For instance, the initial output of an AI tool may unduly influence our decision-making, even if further research contradicts it. Recognizing this bias encourages a more cautious approach to initial data, prompting us to validate and compare information before drawing conclusions.

- Availability Heuristic: This bias leads us to overestimate the importance of information that comes to mind easily, often due to recent exposure. For instance, a widely circulated AI-generated headline may skew our perception of an issue because it’s fresh in our memory, even if it doesn’t accurately reflect broader realities. Awareness of this bias helps us put information in context, preventing us from overvaluing recent or highly visible information.

- Bandwagon Effect: The bandwagon effect is the tendency to adopt beliefs or follow trends simply because others do. In a digital age where AI-driven content can amplify popular trends, this effect can lead to uncritical acceptance of ideas. Identifying this bias encourages us to make independent judgments rather than following the crowd.

Cultivation Techniques

To counteract these biases and reduce natural stupidity, we can adopt practices that promote awareness, mindfulness, and critical thinking. These techniques foster a more deliberate approach to processing information, helping individuals make better decisions.

- Promote Mindfulness and Reflective Practices

Mindfulness involves cultivating an awareness of our thoughts, emotions, and surroundings. By practicing mindfulness, individuals can become more attuned to their cognitive biases and impulses, allowing them to respond thoughtfully rather than react impulsively. Here are a few methods to promote mindfulness:

- Regular Self-Reflection: Set aside time each day to reflect on the decisions made throughout the day. Consider how each choice was influenced by cognitive biases or emotional states, and evaluate how these decisions align with long-term goals or values. Practiced consistently, this reflection makes it easier to recognize biases as they arise.

- Mindful Information Consumption: Rather than scrolling aimlessly through digital content, approach information with a purpose. Pause before engaging with AI-recommended articles or videos and consider why these recommendations were made. Practicing mindful media consumption helps reduce susceptibility to digital influence, as individuals learn to question the motivation behind certain information.

- Emotional Awareness: Our decisions are often shaped by emotions, which can cloud judgment. Developing emotional awareness enables individuals to recognize when they’re reacting from a place of stress, anger, or excitement, rather than logical reasoning. Techniques like deep breathing, journaling, and meditation can help cultivate this awareness, promoting a clearer, more balanced approach to decision-making.

- Encourage Engagement in Discussions that Challenge Assumptions

Thought-provoking discussions help us recognize and overcome our biases by exposing us to diverse viewpoints. Engaging with people who hold different perspectives encourages open-mindedness, curiosity, and critical thinking.

- Structured Debates and Group Discussions: Whether in educational settings or workplace environments, structured debates provide a platform for exploring multiple perspectives on an issue. By actively listening to others’ arguments and defending their own, individuals learn to scrutinize their beliefs, question assumptions, and consider alternative viewpoints.

- Playing Devil’s Advocate: In any group discussion, assigning someone the role of “devil’s advocate” encourages participants to anticipate opposing arguments. This practice is particularly valuable in settings where decision-making can benefit from rigorous analysis, such as business strategy meetings or policy discussions. By challenging assumptions, devil’s advocacy helps expose potential weaknesses in reasoning, promoting more informed decisions.

- Diverse Social Circles: Engaging with people from diverse backgrounds fosters open-mindedness by exposing individuals to different cultural, social, and intellectual perspectives. Encouraging friendships and interactions across various communities can broaden one’s worldview, reducing the risk of cognitive biases that come from insular thinking.

- Practical Exercises for Building Commonsense Reasoning

Commonsense reasoning skills can be strengthened through practical exercises that challenge individuals to think outside their comfort zones. Here are a few exercises designed to enhance critical thinking and reflective decision-making:

- Journaling Prompts on Decision-Making: Regularly writing about decision-making experiences encourages individuals to reflect on how their cognitive biases influence choices. Prompts such as “Did I consider alternative options?” or “What biases might have affected my decision?” help individuals build awareness and refine their reasoning process.

- Scenario Planning and Role-Playing: By simulating real-life scenarios, individuals can practice making decisions in a controlled environment. For example, role-playing a crisis situation where AI advice conflicts with commonsense reasoning allows individuals to evaluate the limits of AI recommendations and strengthen their decision-making skills.

- Critical Thinking Challenges: Engaging in logic puzzles, brain teasers, or thought experiments can help train the brain to approach problems analytically. These exercises improve cognitive flexibility, promoting a more balanced and rational approach to decision-making that counters natural tendencies toward impulsive or biased judgments.

Through these techniques, individuals can cultivate a mindset of thoughtful, critical engagement that counters natural stupidity. By recognizing cognitive biases and actively working to counteract them, we can foster commonsense reasoning skills that not only complement AI’s capabilities but also protect us from overreliance on technology. This approach ensures that as we integrate AI into our lives, we retain our agency, perspective, and intellectual independence, using technology as a supportive tool rather than a substitute for thoughtful decision-making.

VI. Using AI as an Enhancing Tool

AI holds significant potential to improve productivity, assist in decision-making, and streamline tasks in various fields. However, using AI effectively requires a thoughtful approach that prioritizes human oversight and critical thinking. This section provides practical recommendations for leveraging AI as a supportive tool while setting clear boundaries to prevent overreliance and maintain intellectual independence.

Practical Recommendations

- Leverage AI for Research and Informed Decision-Making While Maintaining Oversight

AI can process vast amounts of data quickly, making it an excellent tool for research and analysis. However, it’s essential to approach AI-generated information with a critical eye, using it as a foundation for further exploration rather than as a definitive answer.

- Use AI to Enhance Data Analysis: AI tools can rapidly analyze trends, detect patterns, and perform predictive modeling. For instance, businesses can use AI-driven analytics to forecast market trends or identify customer preferences. However, rather than relying solely on these insights, decision-makers should cross-check findings with historical data, expert opinions, or industry-specific knowledge to ensure well-rounded and accurate conclusions.

- Support, Don’t Substitute, Human Expertise: AI is valuable for offering options and potential solutions, but it should not replace domain-specific expertise. For example, doctors may use AI to help diagnose patients or suggest treatments, but their training and experience are essential for final diagnosis and patient care. Encouraging professionals to view AI as a supportive tool ensures that they retain ultimate decision-making authority, keeping commonsense and human intuition at the forefront.

- Apply AI to Streamline Administrative and Routine Tasks: AI’s strengths lie in automating repetitive processes. In fields like accounting, HR, and customer service, AI can handle tasks such as data entry, appointment scheduling, and responding to basic inquiries. Using AI for these tasks frees up time for human workers to focus on creative, complex, and strategic activities where their judgment and expertise are most valuable.

- Developing Verification Practices

Given AI’s capacity to generate vast amounts of information, it’s essential to adopt verification practices that ensure accuracy and reliability. AI outputs can contain errors, biases, or outdated information, so double-checking facts is necessary to avoid spreading misinformation.

- Cross-Reference Information: Rather than treating AI-generated insights as absolute, cross-check them against multiple sources. For example, if AI provides a summary of a news topic, verify its key points against trusted news outlets. This practice is particularly important in fields such as finance, journalism, and legal work, where accuracy directly affects decisions.

- Validate Against Real-World Feedback: In areas where AI makes predictions, such as weather forecasting or market trends, it’s essential to monitor how AI predictions compare to actual outcomes. This feedback loop allows users to gauge AI accuracy over time, developing a clearer sense of when and how to rely on AI’s insights.

- Employ Human-in-the-Loop Systems: Integrating human oversight into AI processes ensures that AI outputs are reviewed by knowledgeable experts, particularly in high-stakes environments like healthcare and law. In a human-in-the-loop model, AI assists with preliminary analysis, but human experts review and validate final conclusions, making it possible to catch errors and apply commonsense reasoning where AI may fall short.
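To make the human-in-the-loop idea concrete, here is a minimal Python sketch of one possible arrangement. It assumes a model that attaches a confidence score to each preliminary suggestion. The names `Case`, `triage`, and `sign_off`, the 0.8 threshold, and the sample labels are hypothetical and chosen only for illustration. In this sketch the AI orders the review queue and flags low-confidence cases for a closer look, while every final decision comes from a human reviewer.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Case:
    case_id: str
    ai_label: str            # the model's preliminary suggestion
    ai_confidence: float     # model-reported score between 0 and 1
    final_label: Optional[str] = None
    reviewed_by: Optional[str] = None


def triage(cases: List[Case], second_look_below: float = 0.8) -> List[Tuple[Case, bool]]:
    """Order cases for expert review, least confident first.

    Nothing is auto-approved: the AI only prioritizes the queue and flags
    low-confidence suggestions for extra scrutiny.
    """
    ordered = sorted(cases, key=lambda c: c.ai_confidence)
    return [(c, c.ai_confidence < second_look_below) for c in ordered]


def sign_off(case: Case, reviewer: str, decision: str) -> bool:
    """Record the expert's decision as final; return True if it overrode the AI."""
    case.final_label = decision
    case.reviewed_by = reviewer
    return decision != case.ai_label


# Hypothetical usage with made-up imaging cases.
cases = [Case("A", "benign", 0.97), Case("B", "malignant", 0.62), Case("C", "benign", 0.85)]
for case, extra_scrutiny in triage(cases):
    print(case.case_id, case.ai_label, "second look" if extra_scrutiny else "standard review")
overrode = sign_off(cases[1], reviewer="radiologist_1", decision="benign")
print(overrode)  # True: the human expert's call replaces the AI suggestion
```

Counting how often `sign_off` returns `True` over time is one simple way to measure how well the model's suggestions hold up against expert judgment.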

Setting Boundaries

- Importance of Establishing Limits on AI Reliance

To avoid the risks of overreliance, setting clear boundaries on how and when to use AI is essential. These boundaries help ensure that human judgment remains a priority, especially when critical thinking or ethical considerations are involved.

- Define Clear Roles for AI in Decision-Making Processes: Setting boundaries means identifying specific areas where AI should play a supportive role rather than a primary one. For instance, in industries like finance, while AI may assist in financial modeling, final investment decisions should involve human input to evaluate risks, ethical implications, and long-term strategies.

- Limit AI’s Role in High-Emotion or Complex Social Interactions: AI should not replace human interaction in settings where empathy, understanding, and moral judgment are paramount. For example, while AI chatbots can handle basic customer service inquiries, human agents should manage sensitive customer issues. Similarly, in education and mental health services, maintaining human connection ensures that clients receive appropriate emotional support and personalized care.

- Establish Time Limits on AI Use for Cognitive Tasks: In environments where individuals may rely heavily on AI to generate ideas, solve problems, or complete tasks, it’s beneficial to set limits on AI usage. For instance, in creative fields like writing, design, or marketing, professionals might limit the time spent using AI tools to prevent overdependence and maintain originality. By setting a “use it, then leave it” policy, individuals are encouraged to think independently and exercise their creativity.

- Promote Regular “Digital Detox” Periods

Regularly unplugging from AI-based technologies can help individuals reconnect with their natural thinking processes and reflect on their experiences without digital assistance. “Digital detox” periods provide space to practice critical thinking and commonsense reasoning without the aid of algorithms.

- Scheduled Breaks from AI-Driven Tools: In workplaces that depend on AI-based productivity software, encouraging employees to take breaks from technology can help them recalibrate. For instance, a company might implement a “tech-free” hour each day to allow employees to brainstorm, discuss, or analyze data without digital assistance.

- Reflection on AI-Dependence During Detox Periods: Digital detox periods provide an opportunity for self-assessment. Individuals can reflect on how AI influences their decision-making, exploring ways to reduce overreliance. These reflections may include journaling about the role AI plays in daily life or discussing AI’s influence with peers, which can foster awareness and mindfulness.

By setting boundaries and maintaining oversight, individuals and organizations can use AI as a supportive tool that complements, rather than replaces, human intelligence. Thoughtful engagement with AI encourages a balanced approach to technology, preserving commonsense, fostering independent thought, and ensuring that AI serves as an enhancer of human experience rather than a crutch. This approach keeps humans at the center of decision-making, empowering us to use AI ethically and effectively while remaining anchored in our own intellectual and creative capacities.

- Dangers of Bias and Algorithmic Errors

As AI becomes increasingly integrated into decision-making, it’s essential to recognize its limitations, particularly in areas prone to bias and error. Despite advancements, AI models are only as good as the data they are trained on, and they may inadvertently reinforce harmful biases or produce errors with significant real-world consequences. This section explores the dangers of bias and algorithmic errors in AI, highlighting real-world examples and providing strategies for verifying AI-generated information to promote accurate and ethical decision-making.

Understanding Risks

- Highlighting Examples of AI Biases and Their Real-World Consequences

AI biases arise when algorithms are trained on datasets that reflect existing societal prejudices or imbalances. These biases can lead to unfair treatment, discriminatory outcomes, and even safety risks, particularly in sensitive fields like hiring, law enforcement, healthcare, and finance.

- Hiring Bias: Some AI-driven hiring tools, trained on historical data from male-dominated industries, have been shown to favor male applicants over female ones. In one widely reported case, a hiring algorithm was found to systematically downrank resumes containing the word “women’s,” reflecting historical gender bias in hiring. This example underscores the importance of ensuring that AI does not perpetuate inequality in employment.

- Racial and Socioeconomic Bias in Criminal Justice: AI systems used in law enforcement have shown a tendency to produce racially biased outcomes. Predictive policing algorithms, for example, can target specific neighborhoods based on historical arrest data, which may already be skewed due to prior over-policing in certain communities. As a result, these systems can disproportionately affect people of color, reinforcing a cycle of inequity and mistrust in the justice system.

- Healthcare Disparities: In healthcare, some AI algorithms have exhibited biases that impact patient care. For instance, algorithms predicting healthcare needs may favor wealthier patients who historically had better access to medical services, leading to disparities in the quality of care provided to underprivileged communities. Such biases can exacerbate existing health inequalities, with severe implications for patient outcomes.

- Financial Exclusion: AI models used for credit scoring or loan approvals may factor in data that inadvertently disadvantages specific demographic groups. For example, by penalizing applicants from zip codes associated with lower income or minority populations, AI-based lending models may systematically restrict financial access for certain groups, limiting economic mobility and perpetuating cycles of poverty.

- Algorithmic Errors with High-Stakes Consequences

Beyond bias, AI can also produce outright errors, whose consequences range from inconvenient to dangerous depending on the application. For instance:

- Medical Diagnostics: In healthcare, even small algorithmic errors can lead to misdiagnoses or incorrect treatment recommendations. If AI misinterprets medical imaging data, the result could be a delayed or incorrect diagnosis, potentially endangering a patient’s life. This underscores the need for human oversight in medical decision-making and the importance of verifying AI outputs with expert analysis.

- Autonomous Vehicles: AI errors in self-driving cars have led to accidents, some of them fatal. If a vehicle’s AI system misinterprets its environment, it can fail to recognize pedestrians or other vehicles, creating severe safety risks. Continuous testing, validation, and regulatory oversight are essential to mitigate such risks in AI applications where human safety is paramount.

Verification Strategies

Given these risks, developing strategies to verify AI-generated information is critical. Taking steps to double-check AI recommendations, particularly in high-stakes settings, helps mitigate potential harm and promotes responsible use of technology.

- Cross-Referencing with Reliable Sources

Relying on AI alone can lead to misguided or incomplete conclusions, making cross-referencing a crucial practice for ensuring accuracy and reliability.

- Consulting Trusted Data Sources: In professional fields like journalism, healthcare, and finance, cross-referencing AI-generated insights with reputable sources helps validate findings. For example, before acting on AI-suggested market trends, financial analysts can compare these insights with data from reliable financial news outlets and databases.

- Leveraging Human Expertise: AI outputs are more reliable when reviewed by subject matter experts who can interpret nuances that AI might overlook. In healthcare, for instance, radiologists should review AI-analyzed scans to ensure diagnoses align with medical knowledge. Human verification not only minimizes errors but also provides a comprehensive understanding of complex information.

- Cross-Checking Legal and Regulatory Standards: In regulated fields, compliance with legal and regulatory standards is essential. Cross-referencing AI-driven decisions against regulatory guidelines ensures that outputs adhere to established norms. For instance, AI recommendations in financial services should align with consumer protection laws, preventing outcomes that might violate ethical or legal standards.

- Engaging with Diverse Perspectives

AI is often limited by the narrow scope of its training data, which may not account for diverse perspectives or emerging trends. Engaging with a range of viewpoints encourages a more holistic understanding of the information presented and helps identify gaps or biases.

- Encouraging Multidisciplinary Input: In fields such as healthcare or public policy, involving professionals from various disciplines (e.g., sociologists, ethicists, legal experts) in the review process can help reveal biases or oversights in AI-generated data. This interdisciplinary approach fosters more balanced decision-making, especially in fields where the impact of bias can have far-reaching consequences.

- Listening to Marginalized Voices: In cases where AI systems impact minority communities, seeking input from those affected can provide valuable insights into potential biases or unintended outcomes. For example, when implementing AI in community services or law enforcement, input from community representatives can reveal biases that might otherwise go unnoticed, leading to more inclusive and fair outcomes.

- Promoting Public and Peer Review: Peer review is a critical process for identifying biases in AI systems, particularly in research-driven environments. By sharing data and algorithms openly, AI developers and researchers invite constructive feedback that can uncover biases, validate findings, and ensure that AI models are robust and transparent.

- Implementing Human-in-the-Loop Verification

A human-in-the-loop approach provides a structured way to validate AI outcomes in high-stakes settings, combining AI’s efficiency with human judgment.

- Regular Audits of AI Systems: Periodic audits of AI models ensure they continue to meet ethical and performance standards, especially as new data is incorporated. Audits can reveal hidden biases and assess whether AI outputs align with desired outcomes. In fields like law enforcement or healthcare, audits are essential for identifying discriminatory patterns or errors that may have emerged over time.

- Collaborative Review Teams: For AI systems used in corporate or public policy decisions, assembling review teams that include data scientists, ethicists, and domain experts promotes accountability and transparency. These teams can regularly evaluate the outputs of AI tools, ensuring they align with organizational goals and ethical standards.

- Continuous Training and Updating of AI Models: AI models require regular updates to reflect changing data, societal values, and industry standards. Continuous training helps prevent errors that arise from outdated data or obsolete models, enhancing the relevance and fairness of AI-driven recommendations.
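As one example of what a bias audit might check, the sketch below compares selection rates across groups in a log of past decisions. It flags any group whose rate falls below 80% of the highest group's rate. That cutoff follows the "four-fifths" rule of thumb from U.S. employee-selection guidelines. It is a screening heuristic, not a complete fairness test. The function names and the sample log are hypothetical.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of positive decisions per group.

    `decisions` is an iterable of (group, was_selected) pairs.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def adverse_impact_audit(decisions, threshold=0.8):
    """Compare each group's selection rate with the highest group's rate.

    Returns {group: (rate, ratio_to_highest, flagged)}, where flagged means the
    ratio is below `threshold` (0.8 mirrors the four-fifths rule of thumb).
    """
    rates = selection_rates(decisions)
    highest = max(rates.values())
    report = {}
    for group, rate in rates.items():
        ratio = rate / highest if highest > 0 else 1.0
        report[group] = (round(rate, 3), round(ratio, 3), ratio < threshold)
    return report


# Hypothetical log: 100 applicants from each of two groups.
log = ([("group_a", True)] * 40 + [("group_a", False)] * 60
       + [("group_b", True)] * 25 + [("group_b", False)] * 75)
for group, row in adverse_impact_audit(log).items():
    print(group, row)
# group_a (0.4, 1.0, False)
# group_b (0.25, 0.625, True)
```

A flagged group should prompt human investigation into why the gap exists. The flag alone is not proof that the model is discriminatory, and an unflagged result does not prove the model is fair.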

Understanding and addressing AI biases and errors is essential to responsible AI use. Through careful verification, cross-referencing, and human oversight, organizations and individuals can reduce the risks associated with biased or erroneous AI outputs. By actively engaging diverse perspectives, implementing thorough review processes, and integrating human judgment, we can ensure AI serves as an ethical and accurate tool that complements, rather than undermines, informed decision-making.

- The Learning Nature of AI

One of AI’s most remarkable characteristics is its ability to learn from data and improve its performance over time. Traditional rule-based systems behave according to fixed, hand-written logic. AI models, by contrast, can be updated to refine their outputs based on new information and user feedback. However, this iterative process also means that errors are an inevitable part of AI’s development, serving as opportunities for both machines and humans to learn and grow. In this section, we’ll explore the dynamic, learning-based nature of AI, emphasizing the importance of feedback and the role of mistakes in fostering improvement.

Continuous Learning and Feedback

- Understanding AI’s Iterative Learning Process

Machine learning and other AI models rely on data to recognize patterns, generate insights, and make predictions. This cycle of training, evaluation, and updating, often described as iterative learning, allows AI systems to adjust their responses based on historical data, user inputs, and feedback, supporting ongoing improvement.

- Training and Retraining Models: AI models are initially trained on a dataset to recognize certain patterns or make predictions. As they encounter new data, they can be retrained to incorporate recent trends. For example, a recommendation algorithm for an online retailer learns from users’ shopping patterns; as consumer preferences evolve, the algorithm is retrained so that its recommendations stay relevant.

- Importance of Data Quality: The quality of data fed into an AI model plays a significant role in its accuracy and reliability. Poor or biased data can lead to faulty models, which is why continuous learning also involves updating and cleansing the data used to train AI. For instance, in healthcare applications, AI must rely on up-to-date, high-quality patient data to provide accurate diagnostics or treatment recommendations.

- Real-World Feedback as an Essential Input: AI systems benefit immensely from real-world feedback, whether through user responses, system audits, or external validation. For instance, in customer service, chatbot responses can be fine-tuned based on user satisfaction ratings. Incorporating real-time feedback helps AI systems adjust to diverse and dynamic scenarios, enhancing their utility and reducing the likelihood of recurring errors.
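A common way to turn real-world feedback into a retraining signal is to track how often recent predictions matched the outcomes observed later. The Python sketch below assumes that a prediction and its outcome can be compared directly, for example a forecast label against the label confirmed afterward. The class name, window size, and accuracy floor are hypothetical choices, not a standard.

```python
from collections import deque


class FeedbackMonitor:
    """Hypothetical helper: compares recent predictions with real-world outcomes."""

    def __init__(self, window=100, min_accuracy=0.85):
        # deque(maxlen=...) silently drops the oldest result once the window is full
        self.results = deque(maxlen=window)
        self.min_accuracy = min_accuracy

    def record(self, predicted, actual):
        """Log whether one prediction matched the outcome observed later."""
        self.results.append(predicted == actual)

    def rolling_accuracy(self):
        """Share of correct predictions in the current window, or None if empty."""
        if not self.results:
            return None
        return sum(self.results) / len(self.results)

    def needs_retraining(self):
        """Flag the model once a full window of feedback falls below the floor."""
        if len(self.results) < self.results.maxlen:
            return False  # too little feedback to judge fairly
        return self.rolling_accuracy() < self.min_accuracy


# Hypothetical usage: a forecast model whose environment shifts.
monitor = FeedbackMonitor(window=10, min_accuracy=0.8)
for _ in range(10):
    monitor.record("rain", "rain")      # ten correct forecasts
print(monitor.needs_retraining())       # False
for _ in range(4):
    monitor.record("rain", "sun")       # conditions change; four misses
print(monitor.rolling_accuracy(), monitor.needs_retraining())  # 0.6 True
```

The sliding window is a deliberate design choice. It makes the monitor react to recent drift rather than to a long-run average that can hide a sudden drop in accuracy. The trade-off is that a small window also reacts to random noise.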

- Role of Human Oversight in Learning

Human oversight is essential to guide AI learning, especially in contexts requiring nuanced judgment or ethical considerations. Humans can spot issues, provide valuable feedback, and steer AI models away from potentially harmful patterns, supporting a more balanced and accurate learning process.

- Manual Adjustments for Complex Situations: In situations where AI might struggle with ambiguous data or ethical considerations, human intervention can direct the model’s learning in a more ethical or contextually appropriate way. For instance, in autonomous driving technology, engineers may adjust models to account for edge cases, like unpredictable pedestrian behavior, that require more sophisticated decision-making than AI alone can handle.

- Ethical Monitoring and Adjustments: As AI systems learn and adapt, ongoing ethical oversight is necessary to ensure they do not inadvertently adopt or amplify biases. In fields like recruitment, monitoring ensures that the AI’s learning process aligns with fair hiring practices, with adjustments made whenever signs of discriminatory bias emerge.

Embracing Errors

- Encouraging Acceptance of Mistakes as Part of Learning for Both AI and Humans

Mistakes are inevitable in both AI development and human learning. Acknowledging them as part of the process allows both humans and machines to improve iteratively. By embracing errors, we foster resilience and adaptability in AI systems while encouraging a culture of continuous learning among users and developers.

- Errors as Feedback for AI Refinement: In machine learning, errors serve as feedback that informs future training cycles. For instance, in predictive text or voice recognition, incorrect outputs (such as misinterpreted words) provide data for model refinement, helping to correct these errors in subsequent iterations. In general, the more relevant, high-quality feedback a model receives, the better it becomes at distinguishing correct from incorrect predictions.

- Human Adaptability in Response to AI Errors: AI errors also offer learning opportunities for humans, who can build critical thinking and problem-solving skills by analyzing these mistakes. For instance, medical practitioners using AI for diagnostics may notice inconsistencies and learn to interpret AI suggestions as part of a larger diagnostic toolkit, not an infallible source. This balanced approach empowers professionals to view AI as a support tool rather than a replacement for expertise.
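The idea that errors drive learning can be seen in one of the oldest learning algorithms, the perceptron. A perceptron changes its weights only when it makes a wrong prediction. The sketch below is a toy illustration of that principle. Modern predictive-text and speech systems are not trained this way; they typically use gradient-based training of large neural networks. The data is a made-up, linearly separable example.

```python
def predict(weights, bias, features):
    """Return +1 if the weighted sum is positive, otherwise -1."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if score > 0 else -1


def train_perceptron(examples, epochs=20, lr=1.0):
    """Train a perceptron on (features, label) pairs with labels in {-1, +1}.

    Returns the weights, the bias, and the number of mistakes made in each epoch.
    """
    n_features = len(examples[0][0])
    weights = [0.0] * n_features
    bias = 0.0
    mistakes_per_epoch = []
    for _ in range(epochs):
        mistakes = 0
        for features, label in examples:
            if predict(weights, bias, features) != label:
                # A wrong prediction is the only event that changes the model:
                # nudge the weights toward the correct label.
                weights = [w + lr * label * x for w, x in zip(weights, features)]
                bias += lr * label
                mistakes += 1
        mistakes_per_epoch.append(mistakes)
        if mistakes == 0:  # every example classified correctly; stop early
            break
    return weights, bias, mistakes_per_epoch


# Toy data: output +1 only when both inputs are 1 (logical AND).
data = [([0, 0], -1), ([0, 1], -1), ([1, 0], -1), ([1, 1], 1)]
w, b, history = train_perceptron(data)
print(history)  # → [1, 3, 3, 2, 1, 0]
```

The mistake count does not fall smoothly, but on linearly separable data like this it eventually reaches zero. On data that no straight line can separate, the perceptron keeps making errors in every pass, which is one reason more flexible models are used in practice.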

- Building a Growth Mindset around AI Limitations

Accepting AI’s limitations and encouraging a growth mindset around its use helps individuals and organizations leverage its capabilities while mitigating risks. Emphasizing the value of learning from mistakes fosters resilience and adaptability, equipping users to handle AI shortcomings constructively.

- Promoting Transparency around AI Performance: Users are more likely to trust AI when its limitations are acknowledged openly. Companies can help manage expectations by being transparent about the performance and potential errors of AI systems, particularly in high-stakes fields. For example, AI-driven healthcare tools should provide disclaimers clarifying that their predictions are based on patterns and probabilities, not certainties.

- Learning from Mistakes to Improve Future Design: Organizations can use AI errors to enhance future AI design, creating more robust models over time. For example, companies developing facial recognition technology may identify inaccuracies with specific demographic groups, using these insights to re-evaluate and improve data diversity in training sets.

- Cultivating a Culture of Continuous Improvement

Treating AI as a work in progress, rather than a finished product, encourages a culture of continuous learning and adaptability. This mindset is beneficial both for AI developers, who iterate and improve models, and for users, who learn to navigate AI capabilities with realistic expectations.

- Incorporating User Feedback Loops: Involving users in AI development by collecting and analyzing their feedback helps AI systems evolve in ways that are user-centered and responsive to real-world needs. Companies that develop chatbots, for instance, can actively seek user input to adjust language, tone, and response accuracy, refining AI functionality over time.

- Viewing AI as a Complement to Human Insight: Reinforcing the concept of AI as a complement rather than a replacement for human insight encourages users to integrate AI thoughtfully into their workflows. In academic research, for example, AI can streamline literature reviews, but human researchers are essential for interpreting findings within broader theoretical and practical frameworks.

By recognizing and embracing AI’s learning nature, we build a foundation for using it wisely and effectively. Accepting that both AI and humans will make mistakes encourages a growth-oriented approach that focuses on learning and improvement. With this mindset, users can harness AI as a supportive, evolving tool—one that enhances, rather than replaces, human judgment and intellectual autonomy.

- Conclusion

In an era dominated by rapid technological advancements, it’s crucial to prioritize the development of natural intelligence alongside the use of AI. While AI offers powerful tools that can enhance our abilities, our capacity for commonsense reasoning, critical thinking, and personal judgment remains irreplaceable. This balance is key to using AI responsibly—as a supplement to, rather than a substitute for, human decision-making.

Reinforcing the Importance of Natural Intelligence

Our innate ability to think critically, exercise judgment, and learn from experience forms the foundation for a balanced approach to AI. By nurturing our natural intelligence, we become better equipped to understand the context, assess information, and make informed decisions, ensuring that AI remains a tool for enhancement rather than a crutch. Embracing this approach helps us navigate complex information landscapes with greater clarity and resilience, guarding against potential downsides like overreliance or diminished critical thinking.

A Call to Action: Engage Actively with AI and Cultivate Personal Reasoning

Active engagement with AI tools is essential—not only to harness their capabilities but also to cultivate our analytical skills and independent reasoning. As we incorporate AI into our personal and professional lives, we should question, verify, and critically assess the information it presents. This process strengthens our natural intelligence and builds a mindset that values AI as a complementary partner, rather than as a sole authority.

Vision for a Collaborative Future

Imagine a future where humans and AI work together to promote deeper understanding, critical thinking, and ethical decision-making. This collaboration could redefine education, healthcare, business, and society at large, with AI assisting in tasks that amplify human potential rather than detract from it. Such a future is achievable if we remain committed to the responsible and mindful use of AI, prioritizing ethical practices and constant learning.

Supporting the MEDA Foundation

As we strive toward a balanced relationship with AI, consider supporting organizations that champion this vision. The MEDA Foundation works tirelessly to promote critical thinking, ethical technology, and education initiatives that empower individuals to navigate our AI-driven world responsibly. Donations to the MEDA Foundation contribute to creating resources, programs, and educational opportunities that foster both technological literacy and independent thought.

Recommended Reading for Deeper Insight

For those interested in exploring these concepts further, consider the following books:

- Artificial Intelligence: A Guide for Thinking Humans by Melanie Mitchell

- Algorithms of Oppression: How Search Engines Reinforce Racism by Safiya Umoja Noble

- Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy by Cathy O’Neil

- Human Compatible: Artificial Intelligence and the Problem of Control by Stuart Russell

Through mindful engagement with AI, continued learning, and a commitment to enhancing our natural intelligence, we can build a future where technology serves humanity’s highest aspirations for wisdom, ethical clarity, and innovation.